by Melanie Lindahl

Introduction

Texas Law’s existing degree audit presented a basic, static snapshot of a student’s progress toward fulfilling their main degree requirements. Student feedback made clear that they would benefit greatly from a more robust registration system, one that helped them plan and understand which courses to register for. In addition, our administrators needed the ability to override a student’s degree requirement to allow other courses to count.

Key problems

For current law students

The existing degree audit was bare-bones and didn’t list all the requirements needed to graduate. It also didn’t support registration planning.

For administrators

Administrators could not override degree requirements for individual students, and even simple changes to the audit required IT assistance.

The existing degree audit had minimal information and was not interactive.

Design Phase

The front-end application was designed with three key aspects in mind:

- A straightforward design to understand which degree requirements were complete and which were not.

- An intuitive interface to view how future courses affected degree requirements.

- The ability for administrators to add overrides to degree requirements for individual students.

Thankfully, our design system had established styles and icons that gave us a firm foundation to get started.

The first design iteration for a new degree audit application.

Prototype Testing

Due to holiday timing issues combined with the Spring semester starting, we first obtained feedback from students after we had developed a high-fidelity XD prototype. We set up a table in the law school where we offered our signature “donut = quick chat” testing and feedback station. The takeaways from those informal testing sessions:

- Overwhelmingly, students understood which degree requirements were complete and incomplete.

- Half fully understood how to add planned courses to their degree audit, and the other half only partially understood.

- The majority of students understood how to generate a degree audit with different filters.

- Other desired enhancements included:

  - Making incomplete degree requirements more prominent.

  - Using different terminology.

  - Breaking out certain degree requirements further.

Solutions for Issues Found

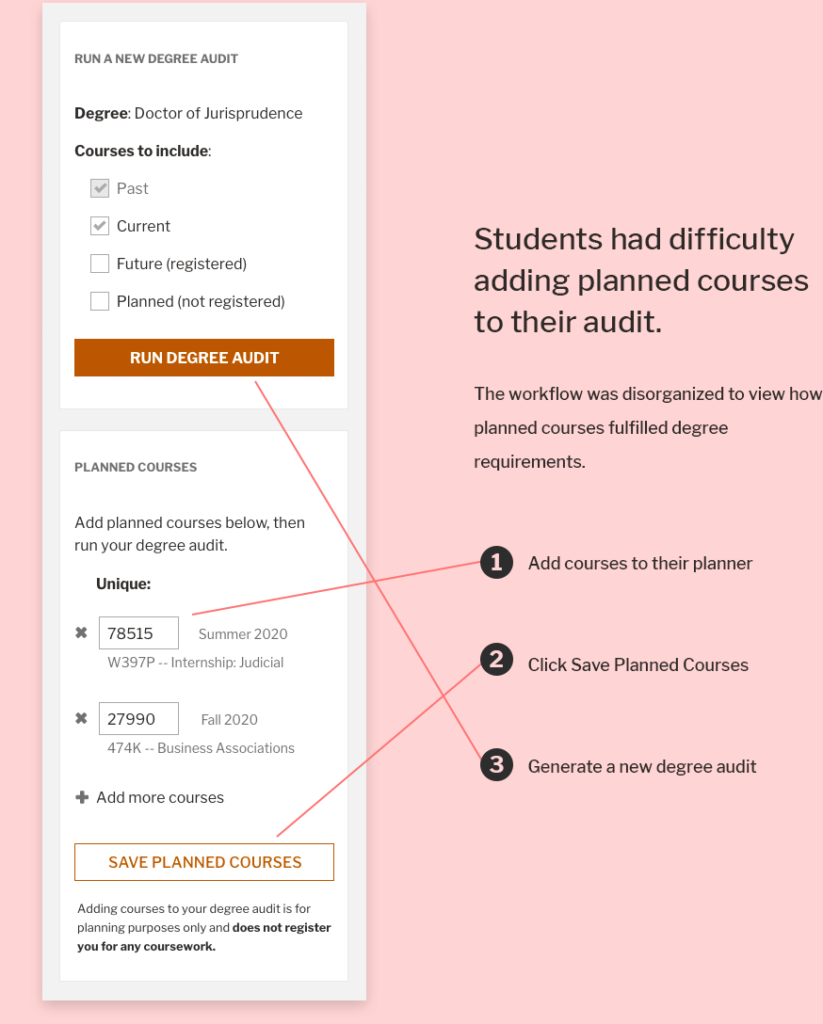

Planned Courses

To help students use their Planned Courses panel more intuitively, we:

- Eliminated a step to re-generate the audit once they saved planned courses.

- Renamed the Planned Courses panel to the more actionable Plan Your Courses.

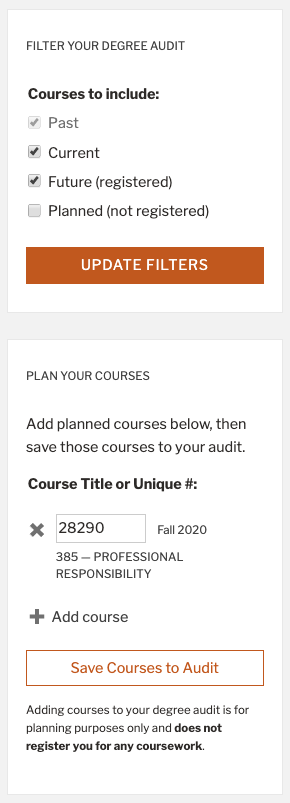

- Reframed the idea of generating a degree audit as using a filtering panel.

- Added an icon next to each planned course (where it appeared in their audit) so students could see how that course counted toward their requirements.

Modernizing

Generating a degree audit was modernized to a filtering concept.

Planned Courses

The number of steps to add planned courses to the audit was reduced.

“Seemed easy to add [a planned course], it’s good to be able to add by title.”

Third-year student

Prominence of Incomplete Requirements

To address the request that incomplete requirements appear more prominently, we placed all degree requirements into three collapsible sections:

- Required First-Year Courses

- Required Advanced Courses

- General Degree Requirements, which included total hour, GPA, and residency requirements.

When all requirements for a section are completed, that section auto-collapses and shows a larger “complete” icon. Incomplete sections then appear higher up in the audit and are more visually prominent, since they stay expanded and carry red “warning” icons.
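The collapse rule described above can be sketched in a few lines. This is a minimal illustration, not the application's actual code: the `Requirement` and `Section` classes, the `section_display_state` function, and the icon names are all hypothetical, and it assumes a section counts as complete only when it has at least one requirement and every requirement is complete.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Requirement:
    # Hypothetical model: a single degree requirement and its status.
    name: str
    complete: bool


@dataclass
class Section:
    # Hypothetical model: one collapsible group of requirements.
    title: str
    requirements: list[Requirement] = field(default_factory=list)

    @property
    def is_complete(self) -> bool:
        # Assumption: an empty section is never treated as complete.
        return bool(self.requirements) and all(r.complete for r in self.requirements)


def section_display_state(sections: list[Section]) -> list[dict]:
    """Return display state per section, preserving the given order."""
    return [
        {
            "title": s.title,
            # Complete sections collapse; incomplete ones stay expanded.
            "collapsed": s.is_complete,
            "icon": "complete" if s.is_complete else "warning",
        }
        for s in sections
    ]
```

Note that the sketch never reorders sections. Incomplete sections only end up higher on the screen because the completed ones above them take less space when collapsed.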

Collapsible Sections

Each section of degree requirements was changed to a collapsible panel with an icon to indicate the status of that section.

This change fulfilled the students’ request to have incomplete degree requirements more prominently displayed.

Additionally, the administrators’ wish to keep requirements in chronological order was honored.

“Dividing it up [into sections] is amazing, so helpful compared to the old one.”

Third-year student

Remote User Testing

In June 2020, we conducted user testing of the final product via Zoom. The pandemic prompted us to try remote testing, which worked out well in several ways.

With the students screen sharing from their own device, it was easy to see how they navigated the application while simultaneously viewing their facial cues. Our note-takers were able to be invisible with their video and audio disabled. Additionally, students had more availability since they weren’t bound by when they were physically in the building.

Unanimously, students loved the application and found it very intuitive. We were a little surprised by how quickly they finished their tasks. We did find a couple of wording and styling tweaks that would make the experience better.

“I think this is super cool. Is it going to be on Dashboard?”

Third-year student

Data Needs

Student course data already existed in our Oracle database, which pulled data from the main campus mainframe. We had existing lists of courses that could count toward each degree requirement. We needed to create new tables to store admin overrides, planned courses, and other smaller audit needs.
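To illustrate how these pieces of data might combine, here is a minimal sketch in Python. It is not the production logic: the function name, the status labels, the course identifiers, and the simplifying assumption that a single eligible course satisfies a requirement are all hypothetical. It only shows the idea that an administrator override adds courses to the list that can count toward a requirement for one student.

```python
def requirement_status(requirement, eligible, completed, planned, overrides):
    """Return 'complete', 'planned', or 'incomplete' for one student.

    eligible:  requirement -> courses that normally count toward it
    overrides: requirement -> extra courses an administrator allowed
               for this particular student
    completed, planned: sets of course identifiers for this student
    """
    allowed = set(eligible.get(requirement, ())) | set(overrides.get(requirement, ()))
    if allowed & set(completed):
        return "complete"
    if allowed & set(planned):
        return "planned"
    return "incomplete"


# Hypothetical data for a single student.
eligible = {"Writing Requirement": {"LAW-301", "LAW-302"}}
completed = {"LAW-450"}
planned = {"LAW-302"}

# Without an override, only the planned course applies.
print(requirement_status("Writing Requirement", eligible, completed, planned, {}))

# With an override, the already completed course is allowed to count.
overrides = {"Writing Requirement": {"LAW-450"}}
print(requirement_status("Writing Requirement", eligible, completed, planned, overrides))
```

Keeping overrides in their own per-student mapping, separate from the shared course lists, means an exception for one student never changes what counts for everyone else.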

Constraints and Limitations

Obtaining feedback from law students was difficult due to semester timing and the students’ intense academic workload. We settled on a mid-March deadline so that students could use the new application for registration planning toward the end of March. We were able to do our prototype testing in mid-February 2020, which put us on a good path to deliver on time. However, stay-at-home orders for COVID-19 pushed our work back, resulting in a two-month delay. We were not able to obtain student feedback during the final phases of the project.

Lessons Learned

We planned to obtain initial student feedback, but that phase fell during Fall semester finals, a time when most students are unavailable. In the future, with careful planning, we should be able to obtain initial feedback months in advance.

Certain terminology from the main campus application was used in the initial phases of this project and should have been modernized sooner. Staff who hadn’t used the main campus degree audit application could have been brought up to speed more thoroughly during the initial phases.